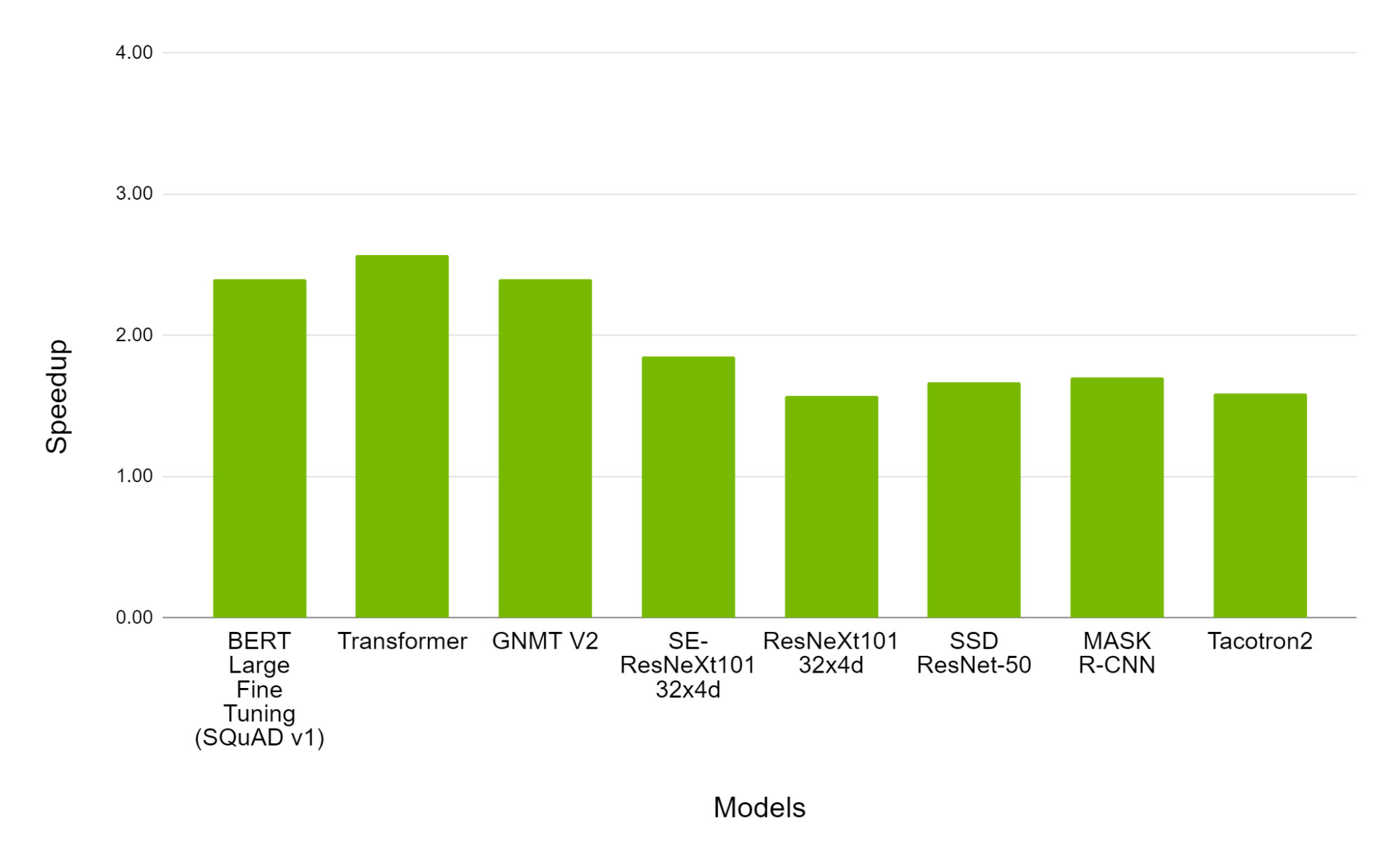

FPGA's Speedup and EDP Reduction Ratios with Respect to GPU FP16 when...

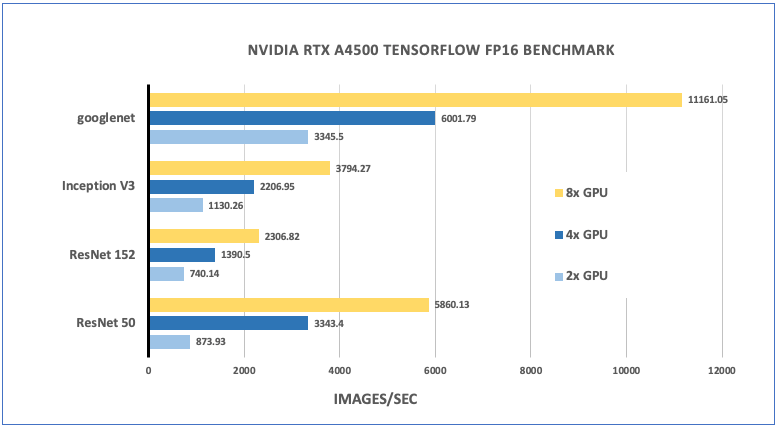

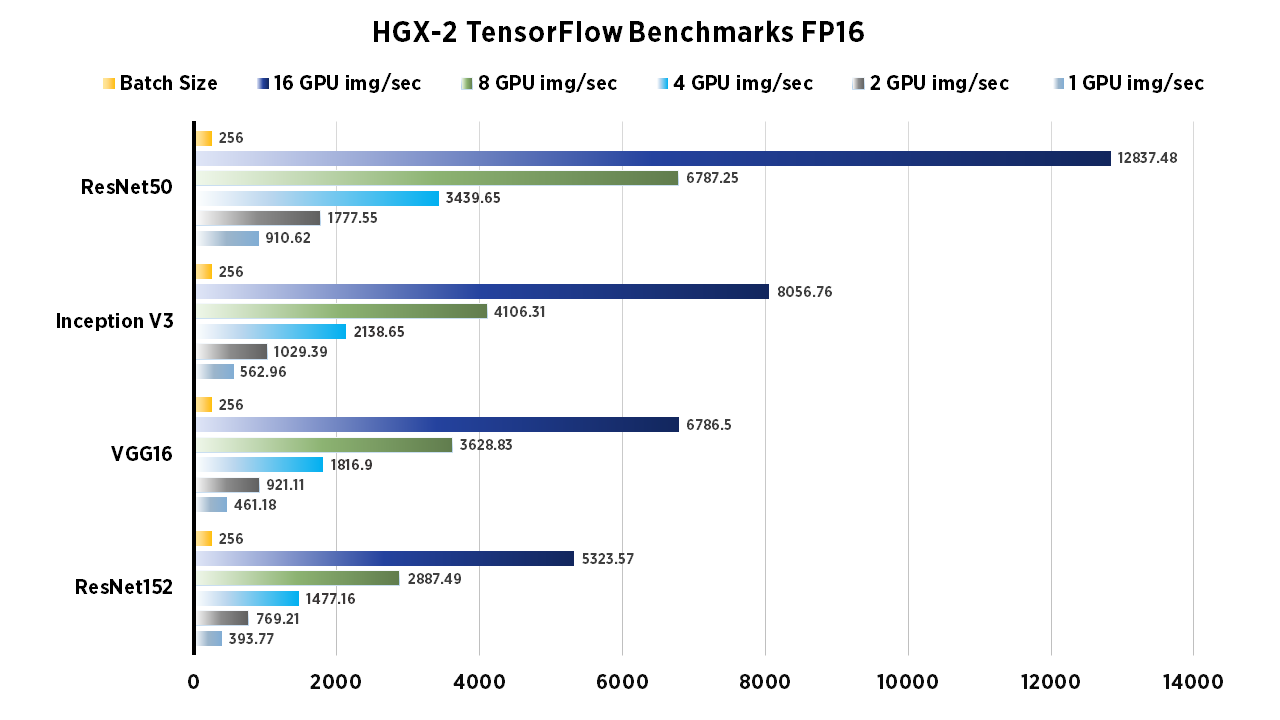

Supermicro Systems Deliver 170 TFLOPS FP16 of Peak Performance for Artificial Intelligence and Deep Learning at GTC 2017 - PR Newswire APAC